Requirements to test AI models, keep humans in the loop, and give people the right to challenge automated decisions made by AI are just some of the 10 mandatory guardrails the Australian government has proposed to minimise AI risk and build public trust in the technology.

Launched for public consultation by Industry and Science Minister Ed Husic in September 2024, the guardrails could soon apply to AI used in high-risk settings. They are complemented by a new Voluntary AI Safety Standard designed to encourage businesses to adopt AI best practice immediately.

What are the mandatory AI guardrails being proposed?

Australia’s 10 proposed mandatory guardrails are designed to set clear expectations on how to use AI safely and responsibly when developing and deploying it in high-risk settings. They seek to address risks and harms from AI, build public trust, and provide businesses with greater regulatory certainty.

Guardrail 1: Accountability

Similar to requirements in Canada's proposed AI legislation and the EU's AI Act, organisations will need to establish, implement, and publish an accountability process for regulatory compliance. This would include policies for data and risk management, as well as clearly defined internal roles and responsibilities.

Guardrail 2: Risk management

A risk management process to identify and mitigate the risks of AI will need to be established and implemented. This must go beyond a technical risk assessment to consider potential impacts on people, community groups, and society before a high-risk AI system can be put into use.


Guardrail 3: Data protection

Organisations will need to protect AI systems with cybersecurity measures that safeguard privacy, and build robust data governance measures to manage both the quality of data and its provenance. The government observed that data quality directly affects the performance and reliability of an AI model.

Guardrail 4: Testing

High-risk AI systems will need to be tested and evaluated before being placed on the market, and continuously monitored once deployed to ensure they operate as expected. The aim is to confirm that they meet specific, objective, and measurable performance metrics and that risk is minimised.
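For IT teams, a testing requirement like this often ends up as an automated check. The sketch below is hypothetical: the proposed guardrails do not prescribe any metrics, thresholds, or tooling, so the metric names (`accuracy`, `false_positive_rate`) and limit values are illustrative assumptions. It shows the general idea of comparing evaluation results against predefined, measurable targets before release, then re-running the same check on live metrics for ongoing monitoring.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    """A measurable target for one metric (hypothetical example values)."""
    metric: str
    limit: float
    higher_is_better: bool = True

    def passes(self, value: float) -> bool:
        return value >= self.limit if self.higher_is_better else value <= self.limit


# Illustrative targets only; the proposed guardrails do not set specific numbers.
THRESHOLDS = [
    Threshold("accuracy", 0.90),
    Threshold("false_positive_rate", 0.05, higher_is_better=False),
]


def evaluate(results: dict[str, float], thresholds: list[Threshold]) -> list[str]:
    """Return a list of failures; an empty list means every target was met."""
    failures = []
    for t in thresholds:
        if t.metric not in results:
            failures.append(f"{t.metric}: not measured")
        elif not t.passes(results[t.metric]):
            failures.append(f"{t.metric}: {results[t.metric]} misses limit {t.limit}")
    return failures


# Pre-deployment gate: block release if any target is missed.
pre_release = {"accuracy": 0.93, "false_positive_rate": 0.04}
print(evaluate(pre_release, THRESHOLDS))  # → []

# Ongoing monitoring: re-run the same check on metrics gathered from live use.
live_week_1 = {"accuracy": 0.87, "false_positive_rate": 0.04}
print(evaluate(live_week_1, THRESHOLDS))  # → ['accuracy: 0.87 misses limit 0.9']
```

Treating a missing metric as a failure, rather than silently skipping it, reflects the guardrail's emphasis on measurable evidence: a target that was never measured cannot be shown to have been met.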

Guardrail 5: Human control

Meaningful human oversight will be required for high-risk AI systems. This will mean organisations must ensure humans can effectively understand the AI system, oversee its operation, and intervene where necessary across the AI supply chain and throughout the AI lifecycle.

Guardrail 6: User information

Organisations will need to inform end-users if they are the subject of any AI-enabled decisions, are interacting with AI, or are consuming any AI-generated content, so they know how AI is being used and where it affects them. This will need to be communicated in a clear, accessible, and relevant manner.

Guardrail 7: Challenging AI

People negatively impacted by AI systems will be entitled to challenge how AI is used or the outcomes it produces. Organisations will need to establish processes for people affected by high-risk AI systems to contest AI-enabled decisions or to make complaints about their experience or treatment.

Guardrail 8: Transparency

Organisations must be transparent with other parties in the AI supply chain about data, models, and systems so that each can effectively address risk. Without this, some actors may lack critical information about how a system works, limiting explainability, a problem already seen with today's advanced AI models.

Guardrail 9: AI records

Organisations will be required to keep and maintain a range of records on each AI system throughout its lifecycle, including technical documentation. They must be ready to provide these records to relevant authorities on request so that their compliance with the guardrails can be assessed.
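The proposals do not specify how records should be stored. One hypothetical approach is an append-only log of lifecycle events for each AI system that can be exported in full when an authority asks for it. The record fields (`system_id`, `event`, `details`), the system names, and the JSON export format below are all assumptions for illustration, not requirements drawn from the guardrails.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Record:
    """One lifecycle entry for an AI system (hypothetical schema)."""
    system_id: str
    event: str      # e.g. "design", "test", "deploy", "incident", "retire"
    details: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class RecordLog:
    """Append-only store: the class exposes no way to edit or delete entries."""

    def __init__(self) -> None:
        self._records: list[Record] = []

    def add(self, record: Record) -> None:
        self._records.append(record)

    def export(self, system_id: str) -> str:
        """Return every record for one system as JSON, e.g. for a regulator's request."""
        matching = [asdict(r) for r in self._records if r.system_id == system_id]
        return json.dumps(matching, indent=2)


# Hypothetical systems and events, for illustration only.
log = RecordLog()
log.add(Record("loan-scorer-v2", "test", "Pre-release evaluation passed all targets"))
log.add(Record("loan-scorer-v2", "deploy", "Released to production"))
log.add(Record("chatbot-v1", "design", "Technical documentation drafted"))
print(log.export("loan-scorer-v2"))
```

Freezing each `Record` and offering only `add` and `export` keeps the history intact, which matters if the log is later used as evidence of compliance.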


Guardrail 10: AI assessments

Organisations will be subject to conformity assessments, described as an accountability and quality-assurance mechanism, to show they have adhered to the guardrails for high-risk AI systems. These will be carried out by the AI system developers, third parties, or government entities or regulators.

When and how will the 10 new mandatory guardrails come into force?

The mandatory guardrails are subject to a public consultation process until Oct. 4, 2024.

After this, the government will seek to finalise the guardrails and bring them into force, according to Husic, who added that this could include creating a new Australian AI Act.

Other options include:

- Adapting existing regulatory frameworks to include the new guardrails.

- Introducing framework legislation with associated amendments to existing legislation.

Husic has said the government will do this "as soon as we can." The guardrails grew out of a longer consultation process on AI regulation that has been running since June 2023.

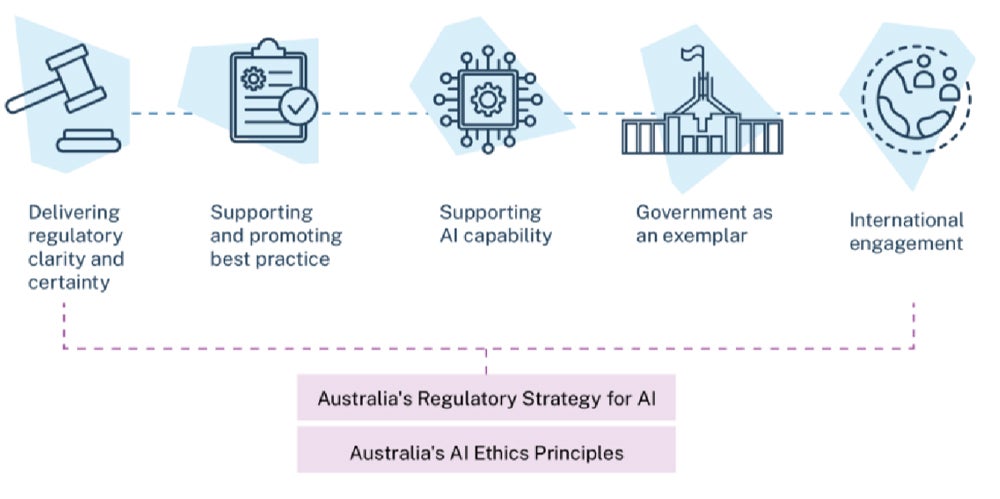

Why is the government taking the approach it is taking to regulation?

The Australian government is following the EU in taking a risk-based approach to regulating AI. This approach seeks to balance the benefits AI promises against the risks of deploying it in high-risk settings.

Focusing on high-risk settings

The preventative measures proposed in the guardrails seek “to avoid catastrophic harm before it occurs,” the government explained in its Safe and responsible AI in Australia proposals paper.

The government will settle its definition of high-risk AI through the consultation. It has suggested it will consider scenarios such as adverse impacts on an individual's human rights, adverse impacts on physical or mental health or safety, and legal effects such as defamatory material, among other potential risks.

Businesses need guidance on AI

The government argues that businesses need clear guardrails to implement AI safely and responsibly.

A newly released Responsible AI Index 2024, commissioned by the National AI Centre, shows that Australian businesses consistently overestimate their capability to employ responsible AI practices.

The results of the index found:

- 78% of Australian businesses believed they were implementing AI safely and responsibly, but in only 29% of cases was this correct.

- Australian organisations are adopting only 12 out of 38 responsible AI practices on average.

What should businesses and IT teams do now?

The mandatory guardrails will create new obligations for organisations using AI in high-risk settings.

IT and security teams are likely to be engaged in meeting some of these requirements, including data quality and security obligations, and ensuring model transparency through the supply chain.

The Voluntary AI Safety Standard

The government has released a Voluntary AI Safety Standard that is available for businesses now.

IT teams that want to be prepared can use the AI Safety Standard to help bring their businesses up to speed with obligations under any future legislation, which may include the new mandatory guardrails.

The AI Safety Standard includes advice on how businesses can adopt and apply it, illustrated with case studies such as the common use case of a general-purpose AI chatbot.